Commercial 3-D Imaging Software Migrates to PC Medical Diagnostics

"Advanced Imaging Magazine", Oct. 1998, pp.16-21

Predictions that volume visualization would become a simple tool of the medical office, not just of high-end labs, are long-standing. And now they're actually coming true.

By Yecheng Wu, Ph.D. and Len Yencharis

OpenGL support from hardware accelerator boards, faster and more sophisticated surface and volume rendering algorithms, and higher-speed CPUs will soon open up the 3D imaging software market to desktop users. The applications range from diagnostic imaging and surgical planning with 3D CT or MRI images to scientific modeling and simulation, non-destructive testing with ultrasound image processing, and other image processing work involving volumetric data. Today they require the power of UNIX-based workstations, but the market is changing rapidly, with a swift, discernible shift to lower-cost PC platforms.

Over the past two decades, a significant amount of R&D effort has been focused on finding better ways to handle and process 3D volumetric images. These image operations include 3D image segmentation, 3D volume and surface rendering, and 3D image restoration by deconvolution. However, because of the complexity and huge size of volumetric data, 3D image visualization and rendering applications have run exclusively on high-end workstations, mainframes or supercomputers. Most of these machines are UNIX-based, with a command-line user interface for these applications.

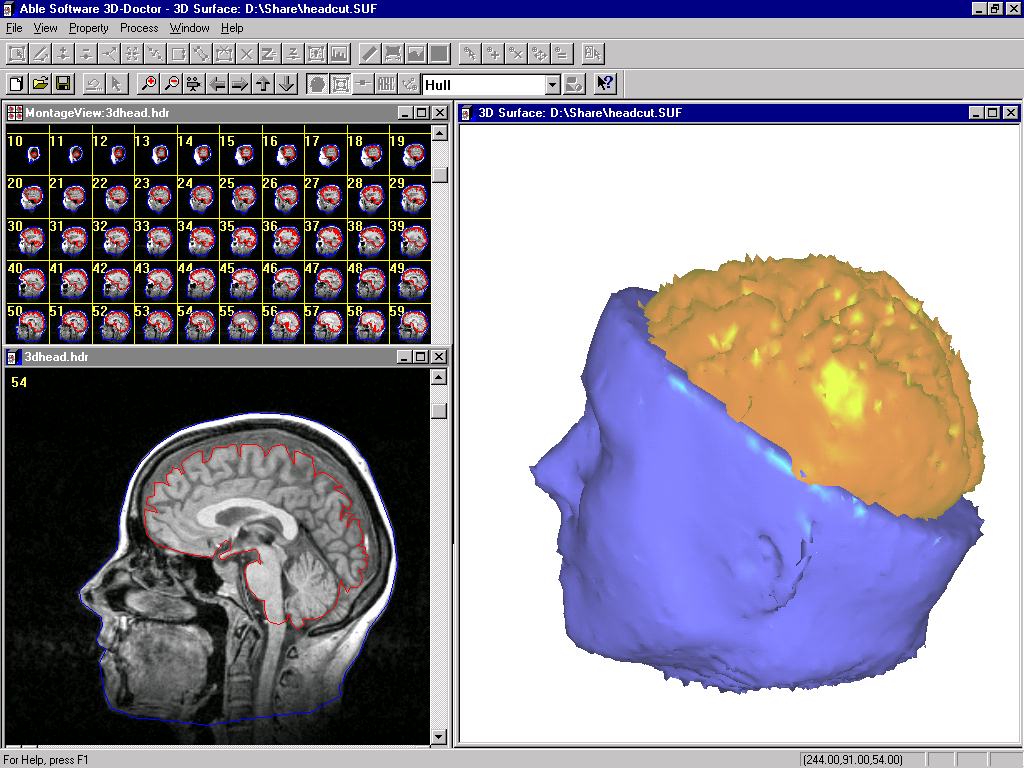

Figure 1. Raster meets vector for neurobio app...with 3D-DOCTOR

But commercial 3D imaging software with comparable capabilities is now becoming available for desktop PCs running Windows, and end users are evaluating PC hardware and software development tools.

With both hardware and software support for OpenGL on the PC platform, application developers can focus on key processing algorithms such as 3D image segmentation, feature extraction, surface generation and volume rendering. OpenGL handles the 3D display and image handling requirements very efficiently, so interactive 3D graphics display and animation become not only possible, but also practical. For example, medical doctors will be able to do all their visualization and analysis from their own desktop computer, and eventually from remote locations with just a laptop and on-line access to the Internet. In other application areas, such as industrial 3D CT and ultrasound image processing, 3D visualization will become a crucial everyday tool rather than the specialized application it is today.

3D COMPLEXITIES

Most 3D image visualization and rendering applications require pre-processing of the source image, 3D segmentation to extract object boundaries, and surface and volume rendering using boundary and image data. Several of these issues were considered in developing 3D-DOCTOR, a 3D imaging and rendering software package, along with the question of how to make interactive 3D rendering and visualization practical on a desktop computer.

The first requirement, OpenGL, made its debut on the desktop with the release of Windows NT 3.5, and became available for Windows 95 shortly after its release by Microsoft. OpenGL has become a de facto industry standard for 3D graphics application development. On the hardware side, many commercially available accelerator boards support OpenGL calls at the board level for applications requiring real-time animation and rendering.

What was missing was a 3D Imaging Software Architecture (ISA) to take advantage of these market developments. 3D imaging software normally uses raster-voxel based data structures for both image handling and data representation, since the source image typically consists of a 3D voxel array. The object boundaries are recorded by encoding the voxels within the object boundary. While this is a straightforward data structure, almost identical to the original source image, it takes a significant amount of system resources and processing power to manipulate.

A vector-based data structure uses lines and points to represent object boundaries, instead of marking each voxel in the 3D volume space. The biggest benefit of a vector-based structure for object representation is that it makes 3D image segmentation more flexible and editing much easier than with raster-based methods. A vector-based structure has far fewer data items to deal with than a raster-based structure, and it handles topology more efficiently for features like islands, holes and branches and the topological relationships between them.
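To make the difference in data volume concrete, here is a minimal sketch that stores the same object both ways on a single slice. The 512 x 512 slice size, the square outline and the function names are all assumptions made for illustration; they are not taken from 3D-DOCTOR.

```python
# Hypothetical example: one square object outlined on a single 512 x 512 slice.
WIDTH, HEIGHT = 512, 512

# Vector form: the boundary is a closed polyline stored as its corner vertices.
boundary = [(100, 100), (400, 100), (400, 400), (100, 400)]

def rasterize_rectangle(corners, width, height):
    """Raster form: label every pixel inside the axis-aligned rectangle with 1."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    mask = [[0] * width for _ in range(height)]
    for y in range(min(ys), max(ys) + 1):
        for x in range(min(xs), max(xs) + 1):
            mask[y][x] = 1
    return mask

def polygon_area(vertices):
    """Shoelace formula: enclosed area computed from the vertices alone."""
    total = 0
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]
        total += x0 * y1 - x1 * y0
    return abs(total) / 2

mask = rasterize_rectangle(boundary, WIDTH, HEIGHT)
labeled_pixels = sum(sum(row) for row in mask)  # pixels marked as "object"
raster_items = WIDTH * HEIGHT                   # values stored per slice
vector_items = len(boundary)                    # vertices stored per slice
```

In this sketch the raster form stores one value for every pixel of the slice, while the vector form stores four vertices and can still answer geometric questions such as the enclosed area.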

Although many algorithms have been developed in recent years for 3D image segmentation, no single algorithm can perform a perfect object boundary extraction, so the extracted boundaries have to be edited. Editing functions are therefore crucial for creating accurate, high-quality surface and volume renderings. A vector-based boundary structure makes interactive editing easier. For operations such as moving or deleting a boundary segment, only a single mouse click is needed. By contrast, the voxel-based method requires modification of every single pixel involved, from hundreds to several thousand pixels. This is time-consuming and tedious, and not practical for processing large numbers of images. On some raster voxel-based systems, creating a complete rendering of a complex object such as a human brain can take 20 to 40 hours of image segmentation and editing.
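The editing cost can be sketched in the same spirit. The example below moves a boundary 10 pixels to the right, first as a vector edit and then as the equivalent raster edit, and counts how many items each touches. The shapes and helper names are hypothetical and only illustrate the principle.

```python
def move_boundary(vertices, dx, dy):
    """Vector edit: translate a closed boundary by shifting each vertex."""
    return [(x + dx, y + dy) for x, y in vertices]

def filled_pixels(vertices):
    """Raster equivalent: the set of pixels covered by an axis-aligned rectangle."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return {(x, y)
            for x in range(min(xs), max(xs))
            for y in range(min(ys), max(ys))}

square = [(100, 100), (400, 100), (400, 400), (100, 400)]
moved = move_boundary(square, 10, 0)

# Items that must change in each representation for the same edit.
vertices_touched = len(square)
pixels_touched = len(filled_pixels(square) ^ filled_pixels(moved))
```

Here the vector edit updates four vertices, while the raster edit has to relabel every pixel in the strips that the object leaves and enters.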

"3D-DOCTOR" is a Windows-platform 3-D image visualization package introduced this past June by Able Software LLC (Lexington, MA). It exploits the vector-based possibilities. And its capabilities, particularly in the hands of the sorts of imaging professionals who've "traditionally" had no choice but the "higher end" (and more expensive) UNIX packages, certainly illustrate the shift of capabilities in 1998.

NEUROLOGY APPLICATION

One of Able Software's 3D-DOCTOR users at the Department of Neurology, University of Pittsburgh, needed to create 3D brain surface renderings from MRI images. Initially, the 3D MRI images are loaded for 2D and montage display. They can then be used for either interactive or fully automatic segmentation to extract both brain and head boundaries. These boundary lines are edited using the interactive on-screen boundary line editor or global boundary line processing functions, such as smoothing, deleting noise lines, and inflating or deflating lines. Once the boundary lines are cleaned up, either surface or volume rendering can be performed based on the boundaries of objects.
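Two of the global boundary operations mentioned above, deleting noise lines and smoothing, can be sketched on vector boundaries as follows. This is an illustration under stated assumptions, not 3D-DOCTOR's actual implementation: the perimeter threshold and the three-point averaging filter are choices made here for the example.

```python
import math

def perimeter(boundary):
    """Length of a closed boundary given as a list of (x, y) vertices."""
    n = len(boundary)
    return sum(math.dist(boundary[i], boundary[(i + 1) % n]) for i in range(n))

def remove_noise_lines(boundaries, min_perimeter):
    """Drop boundaries shorter than a (hypothetical) perimeter threshold."""
    return [b for b in boundaries if perimeter(b) >= min_perimeter]

def smooth(boundary, passes=1):
    """Replace each vertex with the average of itself and its two neighbors."""
    pts = list(boundary)
    n = len(pts)
    for _ in range(passes):
        pts = [
            ((pts[i - 1][0] + pts[i][0] + pts[(i + 1) % n][0]) / 3,
             (pts[i - 1][1] + pts[i][1] + pts[(i + 1) % n][1]) / 3)
            for i in range(n)
        ]
    return pts

head = [(0, 0), (10, 0), (10, 10), (0, 10)]   # a real outline
speck = [(20, 20), (21, 20), (20, 21)]        # a tiny noise contour
cleaned = remove_noise_lines([head, speck], min_perimeter=5.0)
smoothed = smooth(cleaned[0])
```

Both operations run over vertex lists only, so their cost depends on the number of boundary points rather than on the size of the image. Simple averaging of this kind also shrinks the outline slightly, which a production tool would need to account for.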

Because the boundary lines use a vector-based structure, the entire process can take from a few minutes to 1 or 2 hours, depending on the source image quality and the complexity of certain objects. In Figure 1, a 3D MRI head image is displayed as a 2D plane view and montage. The surface rendering is displayed in the upper right window. The lower right window displays the volume-rendered image with the top half of the head removed.

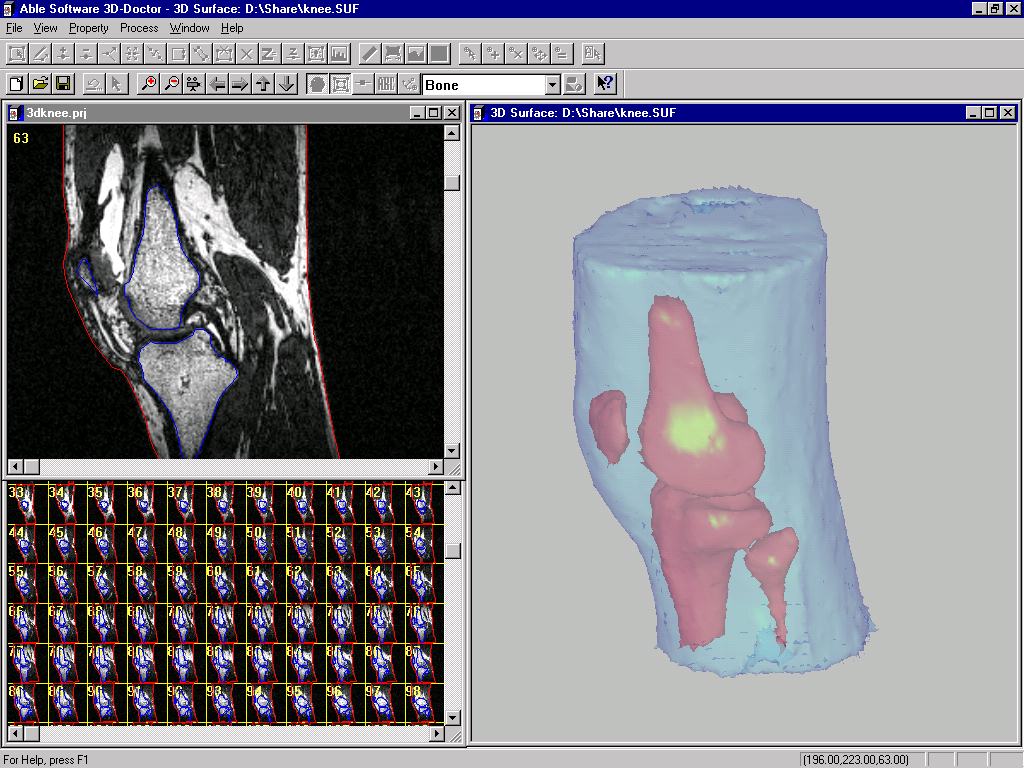

Figure 2. Volumetric rendering of a knee has needed the kick of the upper-end workstation, but that's changing fast.

EFFICIENT SURFACE RENDERING

In contrast to conventional raster-based surface creation algorithms such as marching cubes, vector-based surface rendering algorithms need less memory and computation time because they create 3D surface triangles directly from boundary line segments and vertices. The tiling and adaptive Delaunay triangulation algorithms have been implemented in the 3D-DOCTOR software.
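The idea of building triangles directly from boundary vertices can be sketched with a simple contour tiling routine. The version below uses a greedy shortest-diagonal heuristic, chosen here for brevity; the article does not say which tiling rule 3D-DOCTOR uses, so this should be read as one plausible illustration. It assumes both contours are closed, ordered in the same direction, and start at roughly aligned vertices.

```python
import math

def tile_contours(lower, upper):
    """Greedily stitch two closed 3D contours into a band of triangles.

    Both contours are lists of (x, y, z) vertices, ordered in the same
    direction, with lower[0] and upper[0] assumed to be roughly aligned.
    """
    m, n = len(lower), len(upper)
    i = j = 0
    triangles = []
    while i < m or j < n:
        p, q = lower[i % m], upper[j % n]
        p_next, q_next = lower[(i + 1) % m], upper[(j + 1) % n]
        # Advance along whichever contour gives the shorter new diagonal,
        # unless that contour has already been walked all the way around.
        advance_lower = j >= n or (
            i < m and math.dist(p_next, q) <= math.dist(p, q_next)
        )
        if advance_lower:
            triangles.append((p, p_next, q))
            i += 1
        else:
            triangles.append((p, q_next, q))
            j += 1
    return triangles

# Two square boundaries on adjacent slices (z = 0 and z = 1).
slice0 = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
slice1 = [(0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
band = tile_contours(slice0, slice1)
```

For contours with m and n vertices, this walk emits m + n triangles in a single pass, without ever visiting the voxels between the two slices.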

Conventional raster-based or ray-tracing-based surface rendering algorithms have to scan the entire raster image pixel by pixel. In some cases, these algorithms can have a time complexity of at least O(n^4) with a huge amount of memory usage, while some vector-based algorithms can easily perform at O(2n) with very little memory usage. This has been the main reason why most current systems run only on high-end workstations.

Surface and volume rendering are two different types of 3D rendering methods for 3D imaging and visualization applications. In principle, surface rendering creates a set of 3D primitives such as triangles to form the surface of an object, and uses those primitives to display the object surface. Final 3D modeling results from surface rendering are normally vector-based. For example, a list of 3D triangles is used to describe the surface.
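A triangle-list surface of this kind might be stored as shared vertices plus index triples, a common layout that is assumed here rather than taken from any particular product. The sketch also shows how a measurement such as surface area falls out of the vector data directly.

```python
import math

# A flat unit square described by two triangles (hypothetical sample data).
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
triangles = [(0, 1, 2), (0, 2, 3)]  # each entry indexes three vertices

def triangle_area(a, b, c):
    """Half the length of the cross product of two edge vectors."""
    ux, uy, uz = (b[k] - a[k] for k in range(3))
    vx, vy, vz = (c[k] - a[k] for k in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def surface_area(vertices, triangles):
    """Sum the areas of all triangles in the mesh."""
    return sum(triangle_area(*(vertices[i] for i in tri)) for tri in triangles)

area = surface_area(vertices, triangles)
```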

Volume rendering, on the other hand, projects the entire 3D volume onto a 2D viewing plane using ray tracing techniques. The output from volume rendering is normally a 2D raster-based image, or bitmap, for display. While both methods render objects in 3D, they show 3D characteristics in different ways. Surface rendering is mainly aimed at creating the surface properties of an object and does nothing with the voxels inside it. This makes it easier to display multiple objects and their spatial relationships, since only surfaces are involved and each is treated as either transparent or opaque.

With OpenGL, 3D surface data can be displayed quite easily through OpenGL function calls, and the viewing angle and object properties can be adjusted interactively, without any waiting. This makes surface rendering more suitable for visualizing the sizes of objects and the spatial relationships between them. Because volume rendering ray-traces all voxels to generate a 3D view in a 2D projection plane, it takes into account not only the voxels on the object surface, but also those within the surface.
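The way interior voxels contribute to a volume-rendered image can be shown with a minimal compositing sketch. It assumes parallel rays along the z axis and a single uniform opacity for every voxel, which is far simpler than a real renderer; the function names and the tiny test volume are hypothetical.

```python
def composite_ray(samples, opacity):
    """Front-to-back compositing of intensity samples along one ray."""
    color = 0.0
    transmittance = 1.0  # fraction of light still passing through
    for value in samples:
        color += transmittance * opacity * value
        transmittance *= 1.0 - opacity
    return color

def render(volume, opacity):
    """Project volume[z][y][x] onto the x-y plane with rays parallel to z."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    return [
        [composite_ray([volume[z][y][x] for z in range(depth)], opacity)
         for x in range(width)]
        for y in range(height)
    ]

# Three slices of a 1 x 2 image; the bright voxel at x = 0 sits one slice deep.
volume = [
    [[0.0, 1.0]],  # z = 0 (front)
    [[1.0, 0.0]],  # z = 1
    [[0.0, 0.0]],  # z = 2 (back)
]
opaque_view = render(volume, opacity=1.0)
translucent_view = render(volume, opacity=0.5)
```

With fully opaque voxels, only the front slice is visible. At half opacity, the voxel hidden behind the front slice at x = 0 shows through in the projected image.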

In addition to displaying the object surface, volume rendering can show information within the object when voxels are treated as transparent. When internal structure and information need to be visualized in 3D, volume rendering may be more flexible than surface rendering. This is especially true when displaying images without distinguishable object boundaries. Examples include visualizing industrial CT images of auto parts, an application of 3D-DOCTOR at Volkswagen AG (Germany). Other examples include 3D temperature images inside an engine cylinder obtained by infrared imaging devices, or smoke passing through a chamber.

Eventually, visualization and rendering systems may become tools as casually accepted as a desktop spreadsheet or word processing program. For now, as the 3D-DOCTOR applications are showing, the migration to the desktop is underway in earnest.